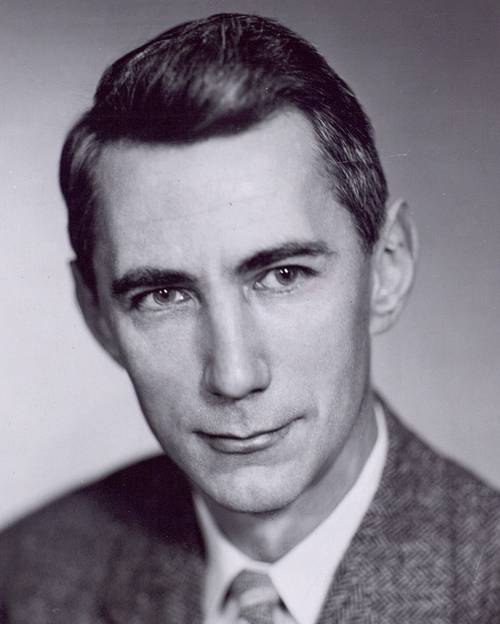

FAQ About Claude Shannon

What is Shannon's entropy?

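Shannon entropy is the information-theoretic measure of uncertainty, or randomness, in a source of messages. It quantifies the average amount of surprise (information) carried by a message drawn from a set of possible messages with known probabilities. It also sets a lower bound on the average number of bits per message that any lossless encoding scheme can achieve, which is why it is used to judge the efficiency of data encoding schemes. High entropy indicates a high degree of unpredictability: a source whose outcomes are all equally likely has the maximum entropy for its number of outcomes, while a source with one certain outcome has zero entropy.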
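As a minimal sketch, assuming base-2 logarithms so that entropy is measured in bits, the entropy of a discrete source X is H(X) = -Σ p(x) log2 p(x), summed over every possible outcome x. The Python below computes this for a list of probabilities and for the symbol frequencies of an observed message. The function names `entropy` and `empirical_entropy` are illustrative only and do not come from any library.

```python
import math
from collections import Counter
from collections.abc import Hashable, Iterable, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits: H = -sum(p * log2(p)).

    Outcomes with p == 0 are skipped, following the convention 0 * log 0 = 0.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    # Summing -p * log2(p) term by term keeps every term non-negative.
    return sum(-p * math.log2(p) for p in probs if p > 0)


def empirical_entropy(symbols: Iterable[Hashable]) -> float:
    """Entropy in bits of the symbol frequencies observed in a sequence."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return entropy([c / total for c in counts.values()])


if __name__ == "__main__":
    print(entropy([0.5, 0.5]))             # fair coin: 1.0
    print(round(entropy([0.9, 0.1]), 3))   # biased coin: 0.469
    print(entropy([0.25] * 4))             # four equally likely outcomes: 2.0
    print(empirical_entropy("abab"))       # two symbols, equal counts: 1.0
    print(empirical_entropy("aaaa"))       # one symbol, no surprise: 0.0
```

The outputs illustrate the point above: the fair coin is less predictable than the biased one and so has higher entropy, four equally likely outcomes need 2 bits each on average, and a message made of a single repeated symbol carries no surprise at all.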