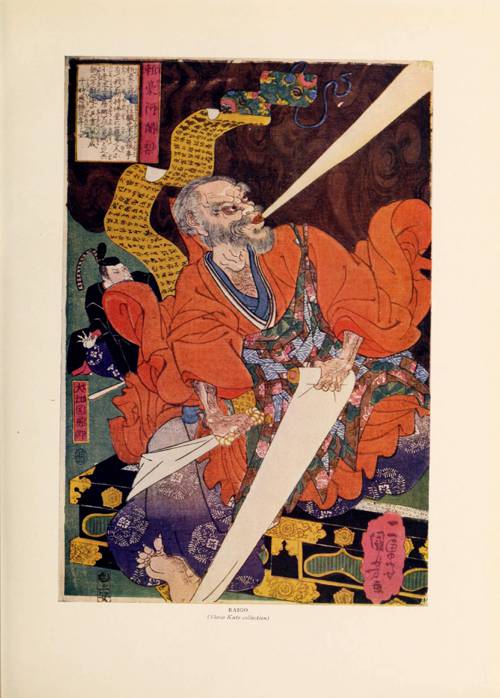

FAQ About the Evolution of Japonism in Western Art

How did Japonism affect Western perceptions of Japan?

Japonism helped shape Western perceptions of Japan as a land of sophisticated art and culture. However, it also fostered stereotypes and misconceptions, as Western observers tended to romanticize and oversimplify Japanese culture.