FAQ About The Role of Westerns in Film and Cultural Identity

How did Western films impact American cultural identity?

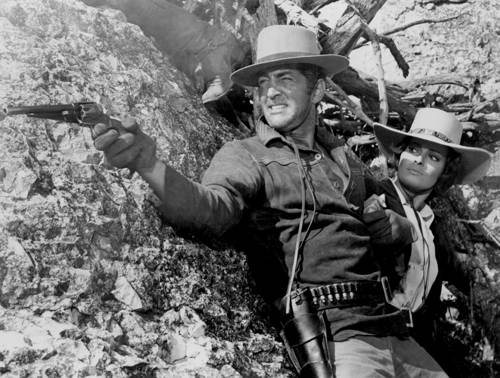

Western films have played a significant role in shaping American cultural identity by romanticizing the frontier era and embodying values such as rugged individualism, bravery, and freedom. They helped create enduring American myths about frontier life, the cowboy figure, and the struggle between progress and the untamed wilderness. The genre has become a metaphor for American ideals and has influenced many aspects of American culture, including literature, art, and national self-perception.