FAQ About The Role of Westerns in Film and Cultural Identity

Why are Western films significant in American history?

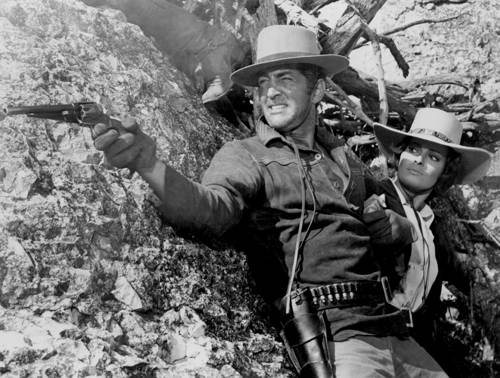

Western films are significant in American history because they both reflect and shape societal values, ideals, and myths about the American frontier. They have helped romanticize the frontier and the pioneer spirit, and they have reinforced narratives of Manifest Destiny. As cultural artifacts, they also show how different eras of American history have interpreted the nation's past.