FAQ About The Role of Westerns in Film and Cultural Identity

How do Westerns portray Native Americans?

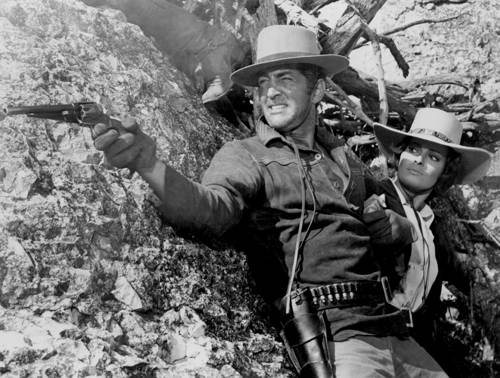

Traditional Westerns often cast Native Americans in stereotypical, negative roles, frequently as antagonists to supposedly "civilized" settlers. More recent Westerns have sought more nuanced and respectful portrayals that acknowledge the injustices Native Americans faced and offer more balanced historical perspectives. These portrayals are part of a broader effort to critically reassess the genre's historical narratives.